LLM04

BONUS TECH DECODER

Backdoor:

A hidden trigger in an AI that activates harmful behavior, like a secret password that unlocks trouble.

Fine-tune:

Teaching an already-trained AI new skills or knowledge, like giving advanced lessons to someone who knows the basics.

Model Weights:

The "memory muscles" of an AI that determine how it responds to information based on its training.

Adversarial Testing:

Deliberately trying to trick an AI with challenging inputs to find weaknesses, like testing locks before thieves do.

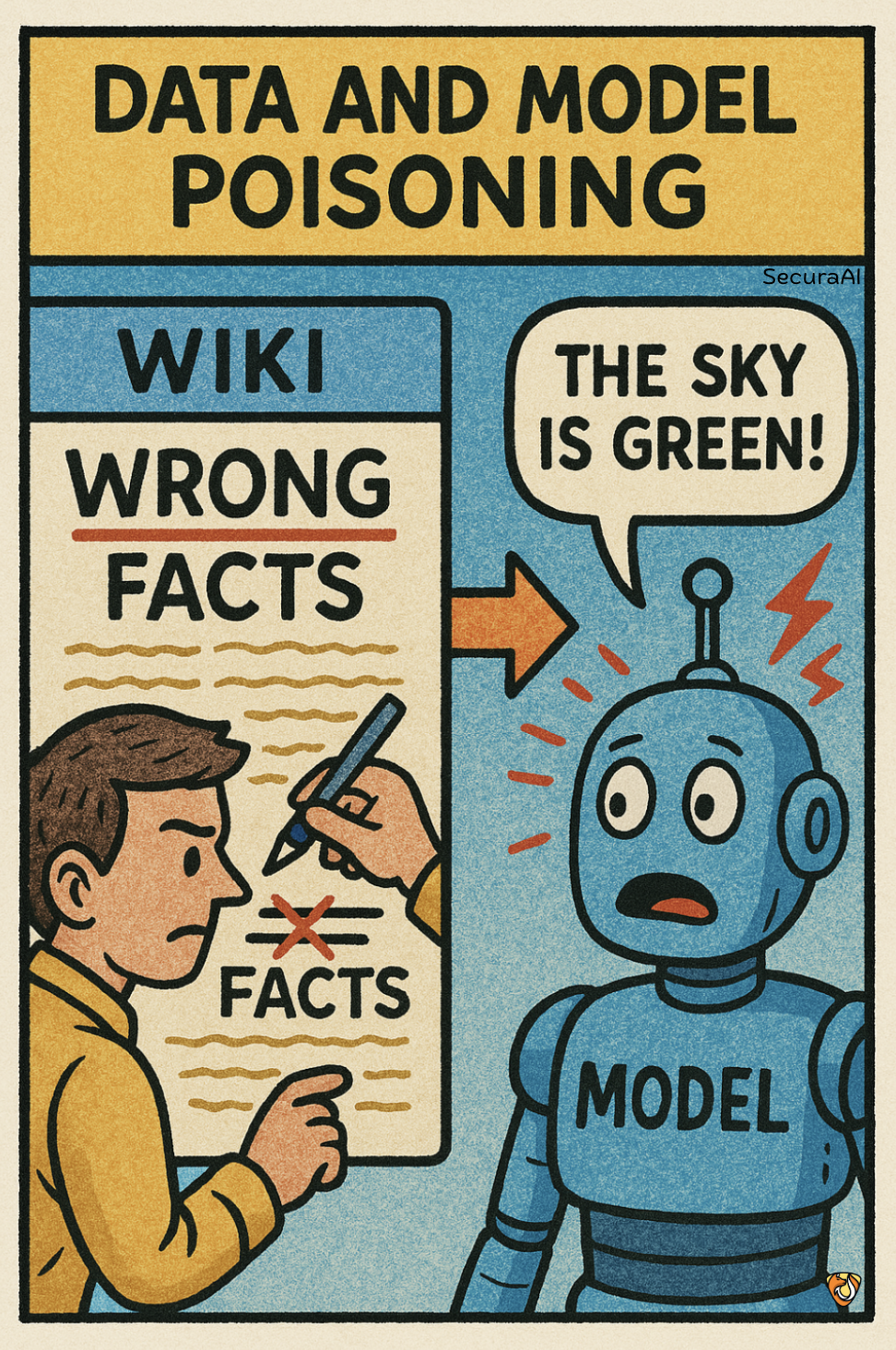

🧠 WHAT IS IT?

Data and Model Poisoning occurs when an attacker deliberately corrupts the information used to train an AI or manipulates the model itself, causing it to learn harmful behaviors or vulnerabilities. It's like tampering with a child's textbooks so they learn incorrect information that benefits someone with bad intentions. Once poisoned, the AI may contain backdoors, biases, or security weaknesses that are extremely difficult to detect and remove.

🔍 HOW IT HAPPENS

- Attackers inject malicious examples into data used for training or fine-tuning AI models

- Alternatively, attackers might directly manipulate model weights or parameters during development

- The poisoned data or model creates specific harmful responses to certain triggers

- These vulnerabilities remain dormant until activated by specific inputs, making them difficult to detect

🚨 WHY IT MATTERS

Poisoned models may contain hidden backdoors that can later be exploited to bypass security, generate harmful content, or leak sensitive information. These vulnerabilities can persist across deployments and potentially affect numerous downstream applications, creating long-term security risks.

🛡️ HOW TO PREVENT IT

- Implement rigorous data validation and cleaning procedures before using any data for training

- Secure the model development pipeline with strong access controls and integrity verification

- Use diverse data sources to minimize the impact of any single contaminated source

- Conduct adversarial testing specifically designed to detect potential backdoors or biases