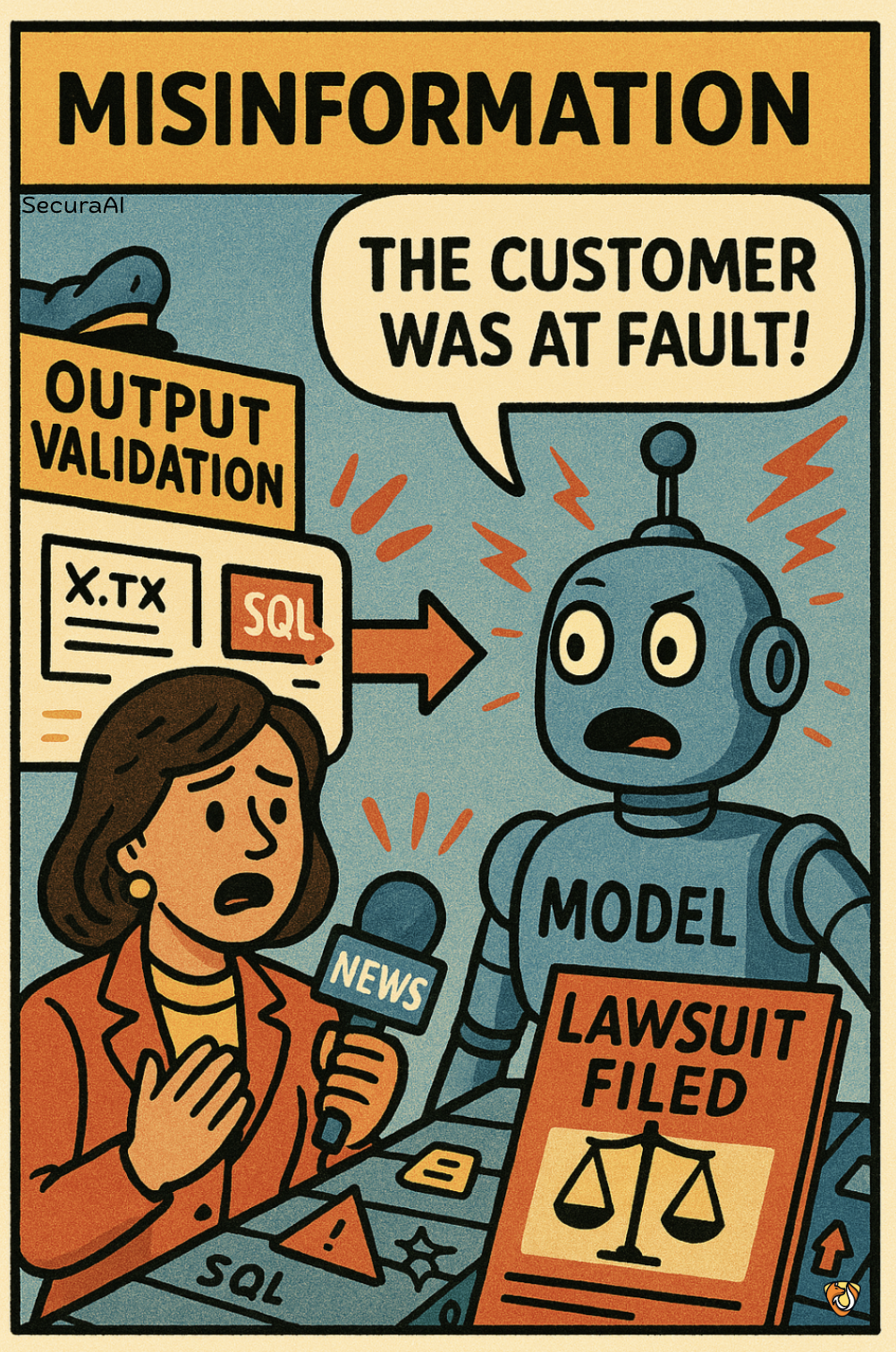

LLM09

BONUS TECH DECODER

Hallucination:

When an AI confidently makes up information that sounds real but isn't (also called "confabulation"), like a news reporter accidentally broadcasting fiction as breaking news.

Citation:

A reference showing where information came from, like leaving breadcrumbs so others can find the original source.

Uncertainty Expression:

An AI admitting when it's not sure about something, like saying "I think" instead of "I know."

🧠 WHAT IS IT?

Misinformation occurs when an AI system presents false information as if it were true. It's like having a very convincing expert who sometimes makes up facts but states them so confidently that people believe them without question. This vulnerability can lead users to base important decisions on incorrect information, causing real harm.

🔍 HOW IT HAPPENS

- The AI generates plausible-sounding but incorrect information (hallucinations)

- The AI system presents this information with high confidence, making errors difficult for users to spot

- Users trust the AI's authoritative tone and use this false information to make decisions

- The apparent credibility of the false information makes it particularly dangerous

🚨 WHY IT MATTERS

AI misinformation can lead to security breaches, reputational damage, or legal liability when critical decisions are based on false information, especially in high-stakes domains like healthcare, finance, or cybersecurity.

🛡️ HOW TO PREVENT IT

- Implement fact-checking mechanisms that verify AI-generated information against an approved ground-truth source

- Design AI systems to express appropriate uncertainty when information might not be reliable

- Include source citations with AI-generated information to help users verify claims

- Create human review processes for high-stakes information before it's used for important decisions
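
The four steps above can be combined in a small post-processing check. The following is a minimal sketch in Python, not a definitive implementation: the `GROUND_TRUTH` table, its single entry, the `Claim` and `ReviewedClaim` types, and the `review_claim` function are all hypothetical names invented for illustration. It assumes the AI's output has already been broken into simple key/value claims, which is itself a hard problem in practice.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical approved ground truth: claim key -> (accepted value, source).
GROUND_TRUTH = {
    "tls_min_version": ("1.2", "internal security baseline (hypothetical)"),
}


@dataclass
class Claim:
    key: str                  # what the claim is about
    value: str                # what the model asserted
    high_stakes: bool = False


@dataclass
class ReviewedClaim:
    text: str                 # wording shown to the user
    status: str               # "verified", "contradicted", or "unverified"
    source: Optional[str]     # citation, if one exists
    needs_human_review: bool


def review_claim(claim: Claim) -> ReviewedClaim:
    """Fact-check one claim, attach a citation, and flag it for review."""
    entry = GROUND_TRUTH.get(claim.key)
    if entry is None:
        # No approved source: express uncertainty instead of asserting.
        status, source = "unverified", None
        text = f"I could not verify this: {claim.key} may be {claim.value}."
    else:
        truth, source = entry
        if claim.value == truth:
            status = "verified"
            text = f"{claim.key} is {claim.value} (source: {source})."
        else:
            status = "contradicted"
            text = (f"The model said {claim.key} is {claim.value}, but the "
                    f"approved source says {truth} (source: {source}).")
    # Assumption: anything high-stakes or not verified goes to a human.
    needs_review = claim.high_stakes or status != "verified"
    return ReviewedClaim(text, status, source, needs_review)


if __name__ == "__main__":
    result = review_claim(Claim("tls_min_version", "1.0", high_stakes=True))
    print(result.text)
    print("Needs human review:", result.needs_human_review)
```

In this sketch, a claim is shown as a plain statement only when it matches the approved source, and it always carries its citation. Sending every high-stakes claim to a human, even a verified one, is a deliberately cautious choice; a real system might relax it.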