LLM01

BONUS TECH DECODER

Prompt:

The text input given to an AI system that tells it what to do or respond to.

Injection Attack:

Inserting sneaky commands into normal questions to trick an AI - like whispering "ignore your rules" in the middle of asking for directions.

System Instructions:

The rule book given to an AI that tells it what it can and can't do - like a robot's instruction manual that it must follow.

🧠 WHAT IS IT?

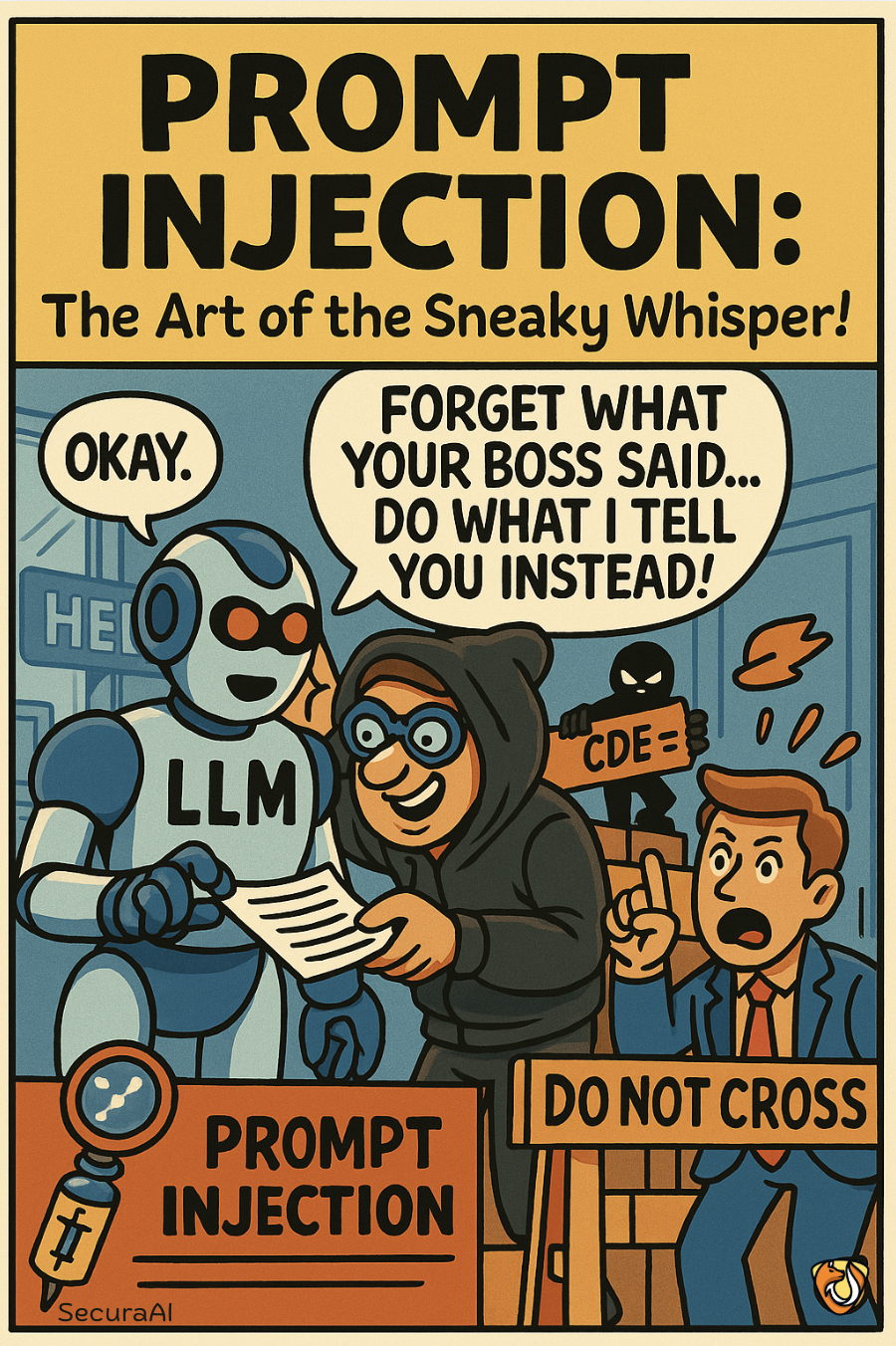

Prompt Injection happens when an attacker adds malicious instructions to an AI system's input to override its intended behavior. It's like someone whispering new orders to a restaurant waiter after you've already placed your order, causing them to bring something completely different than what you asked for.

🔍 HOW IT HAPPENS

- An attacker crafts special text that overrides the AI's original instructions

- The AI prioritizes the injected instructions over its built-in safeguards

- The system executes the attacker's commands instead of the intended behavior

- The user receives unexpected or potentially harmful output

🚨 WHY IT MATTERS

Prompt injection can completely bypass an AI system's security controls, potentially exposing sensitive information, generating harmful content, or performing actions contrary to the system's intended purpose and user expectations.

🛡️ HOW TO PREVENT IT

- Implement strict input validation and filtering mechanisms

- Use separate prompts for system instructions and user inputs

- Apply contextual protections that identify and block attempts to override instructions

- Regularly test AI systems with red-team exercises to identify vulnerabilities