LLM02: Sensitive Information Disclosure

BONUS TECH DECODER

Data Minimization:

Collecting and keeping only the data you actually need – like asking for someone's name instead of their entire life story.
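A minimal Python sketch of data minimization, assuming a hypothetical chatbot that needs only a user's first name and preferred language. An allowlist strips every other field from a record before it is stored or passed to a model. The field names and the `minimize` helper are illustrative, not part of any particular product.

```python
# Hypothetical allowlist: the only fields this chatbot needs to greet a user.
REQUIRED_FIELDS = {"first_name", "preferred_language"}


def minimize(record: dict) -> dict:
    """Keep only allowlisted fields; everything else is dropped before it reaches the model."""
    return {key: value for key, value in record.items() if key in REQUIRED_FIELDS}


customer = {
    "first_name": "Ana",
    "preferred_language": "pt",
    "ssn": "123-45-6789",         # fake value for illustration
    "date_of_birth": "1990-04-01",
    "home_address": "12 Example St",
}

print(minimize(customer))
# {'first_name': 'Ana', 'preferred_language': 'pt'}
```

An allowlist is used rather than a blocklist so that any new field added upstream is excluded by default instead of leaking until someone remembers to block it.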

Differential Privacy:

Adding carefully calibrated random noise to data or query results, so that aggregate statistics remain useful while strictly limiting how much anyone can learn about any specific person.
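As a hedged illustration, the classic Laplace mechanism applies this idea to a counting query. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/epsilon (ε) gives ε-differential privacy. The sketch uses only Python's standard library; the dataset and the `private_count` and `laplace_noise` helpers are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values: list[bool], epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query.

    One person changes the count by at most 1 (sensitivity 1), so noise
    with scale 1/epsilon gives epsilon-differential privacy.
    """
    true_count = sum(values)
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(0)  # seeded only so the demo is repeatable
has_condition = [True] * 120 + [False] * 880  # hypothetical dataset of 1,000 people
print(round(private_count(has_condition, epsilon=0.5, rng=rng), 1))
```

Smaller values of `epsilon` add more noise and give stronger privacy. This is a teaching sketch: naive floating-point Laplace sampling has known weaknesses, so production systems should rely on a vetted differential privacy library.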

PII (Personally Identifiable Information):

Data that can be used to identify a specific individual, such as names, Social Security numbers, or biometric records.

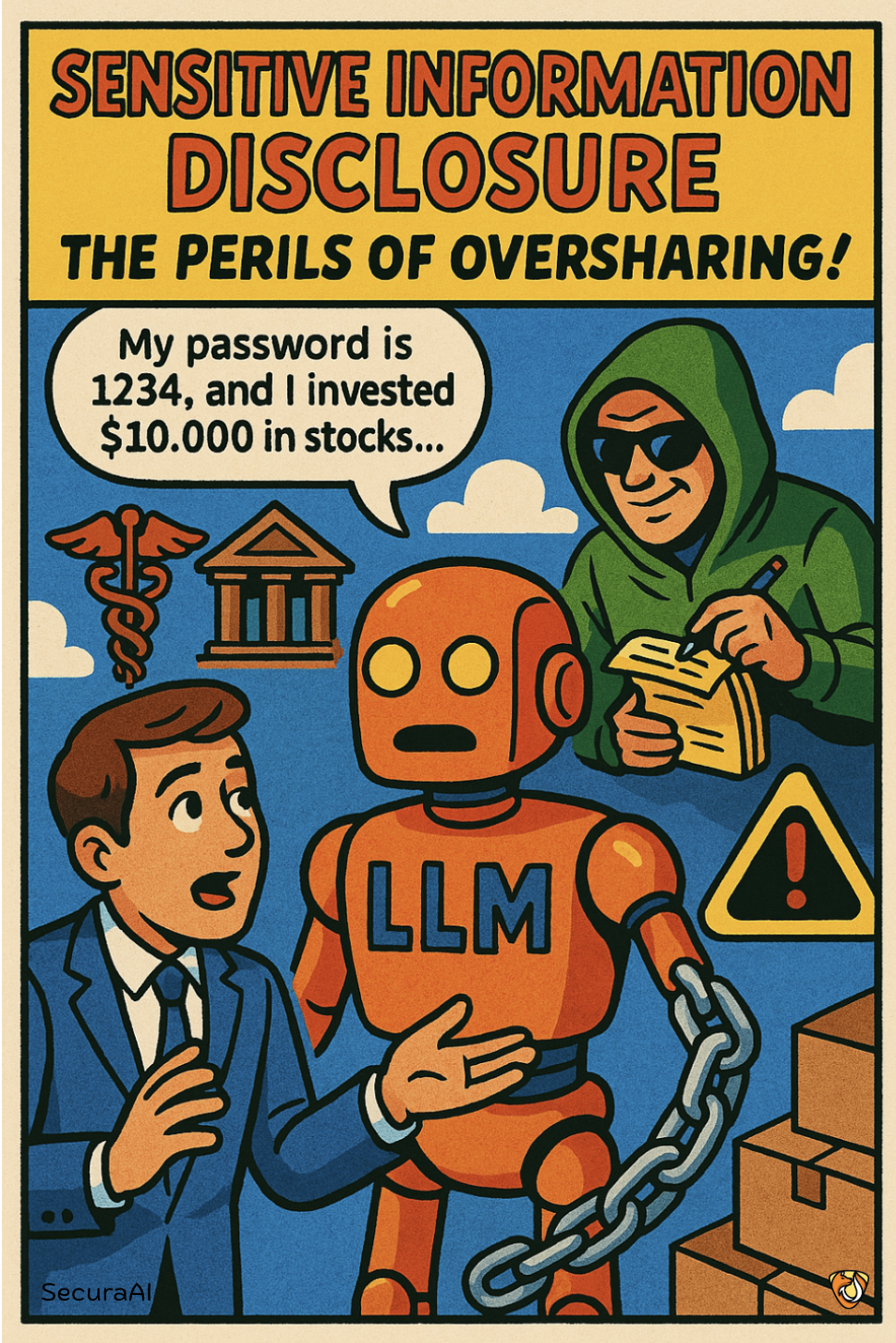

🧠 WHAT IS IT?

Sensitive Information Disclosure occurs when an AI system inadvertently reveals private, confidential, or restricted information that should remain hidden. It's like a bank teller accidentally showing your account details to another customer who should never have had access to them.

🔍 HOW IT HAPPENS

- The AI may be trained on data containing sensitive information, which it can memorize

- Specific prompts can trigger the AI to recall and repeat that sensitive data

- The system fails to recognize that certain information should be kept private

- Crafty questioning techniques can extract confidential information piece by piece
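The last point is why checking each reply in isolation is not enough. The toy Python sketch below assumes a hypothetical secret code that leaks one fragment per answer: no single response contains the full secret, but the conversation as a whole reveals all of it. The `exposure` helper, the secret, and the sample responses are illustrative only.

```python
import re


def pieces(text: str) -> set[str]:
    # Split text into alphanumeric chunks, e.g. "ACME-7731-ZETA" -> {"ACME", "7731", "ZETA"}
    return set(re.findall(r"[A-Za-z0-9]+", text))


def exposure(secret: str, texts: list[str]) -> float:
    """Fraction of the secret's pieces that appear anywhere in the given texts."""
    secret_pieces = pieces(secret)
    seen: set[str] = set()
    for text in texts:
        seen |= secret_pieces & pieces(text)
    return len(seen) / len(secret_pieces)


SECRET = "ACME-7731-ZETA"  # hypothetical confidential value

responses = [
    "The code starts with ACME.",
    "The middle part is 7731.",
    "It ends with ZETA.",
]

# Per-response view: no reply contains the full secret, each leaks about a third.
print(any(SECRET in r for r in responses))          # False
print([exposure(SECRET, [r]) for r in responses])

# Conversation view: every piece has leaked.
print(exposure(SECRET, responses))                  # 1.0
```

A real guard would track conversation history in a similar spirit, though spotting fragments of free-form sensitive data is much harder than this fixed-format example suggests.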

🚨 WHY IT MATTERS

Disclosure of sensitive information can lead to privacy violations, identity theft, corporate espionage, or compliance failures. It undermines trust in AI systems and can result in significant legal, financial, and reputational damage for organizations.

🛡️ HOW TO PREVENT IT

- Implement data minimization: train the AI only on the information it actually needs

- Use privacy-enhancing techniques like differential privacy when training models

- Apply robust content filtering to detect and redact sensitive information in outputs

- Regularly audit AI responses for information leakage, especially when new prompt patterns, data sources, or model versions are introduced
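A minimal sketch of output redaction, assuming a few regex patterns for common PII formats (email addresses, U.S. Social Security numbers, and card-like digit runs). Pattern matching catches only well-structured identifiers; names, addresses, and free-text details need stronger detection, so treat the `PII_PATTERNS` table and `redact` helper below as hypothetical starting points.

```python
import re

# Hypothetical patterns for a few well-structured PII formats.
# Regexes alone will miss names, addresses, and locale-specific IDs.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13-16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with [TYPE] placeholders and report which types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


reply = "Sure! Jane's email is jane.doe@example.com and her SSN is 123-45-6789."
clean, types = redact(reply)
print(clean)  # Sure! Jane's email is [EMAIL] and her SSN is [SSN].
print(types)  # ['EMAIL', 'SSN']
```

Returning the detected types alongside the cleaned text lets the same function double as an audit hook: running it over logged responses gives a rough count of how often each kind of data slipped into outputs.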